Kappa

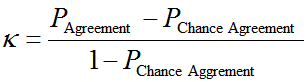

Kappa is a coefficient that measures the proportion of agreement above that expected by chance. Kappa calculations are shown below.

Notes on Kappa

- Kappa may be used as a general measure of agreement for nominal data

- Calculation methods exist for two or more categories, two or more repeated assessments, and one or more appraisers

- Tests for the significance of kappa are available

- Confidence intervals for kappa are available

- Kappa can range from -1.0 (complete disagreement) to +1.0 (complete agreement) for symmetrical tables

- Negative kappa values indicate the level of agreement was below that expected by chance

- The maximum value of kappa is a function of the symmetry of the table and the differences in the category proportions between appraisers
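The range described in the notes above can be illustrated with a minimal sketch. It assumes the usual two-way form of kappa, (Po - Pe) / (1 - Pe), where Po is the observed agreement and Pe the agreement expected by chance. The function name `cohen_kappa` and the three example tables are hypothetical and chosen only for illustration.

```python
def cohen_kappa(table):
    """Kappa for a square agreement table given as a list of rows of counts.

    Assumes the two-way form (Po - Pe) / (1 - Pe). Undefined when Pe == 1,
    i.e. when every item falls in a single category.
    """
    n = sum(sum(row) for row in table)
    k = len(table)
    # Observed agreement: proportion of items on the diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n
    # Marginal proportions for rows and columns.
    row = [sum(table[i]) / n for i in range(k)]
    col = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    # Chance agreement: sum of products of matching marginal proportions.
    p_e = sum(r * c for r, c in zip(row, col))
    return (p_o - p_e) / (1 - p_e)


perfect = [[25, 0], [0, 25]]    # every item classified the same way twice
opposite = [[0, 25], [25, 0]]   # every item classified the opposite way
chance = [[10, 10], [10, 10]]   # agreement no better than chance

print(cohen_kappa(perfect))   # 1.0
print(cohen_kappa(opposite))  # -1.0
print(cohen_kappa(chance))    # 0.0
```

Both extreme tables are symmetrical, which is why the full -1.0 to +1.0 range is reachable here.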

Calculations

— One Appraiser

— Two Categories

— Two Repeated Assessments

M = Number of Repeated Assessments

C = Number of Categories

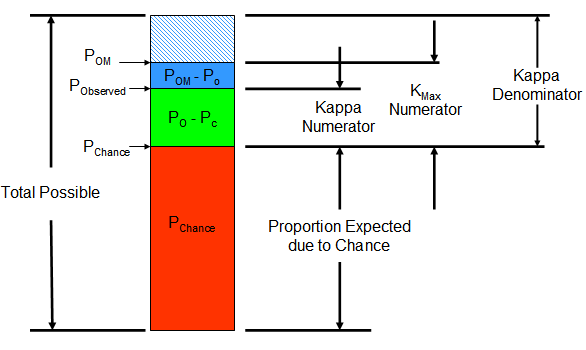

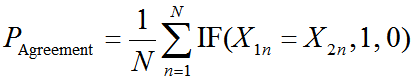

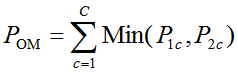

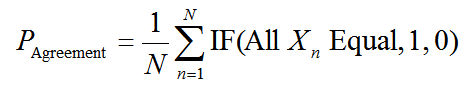

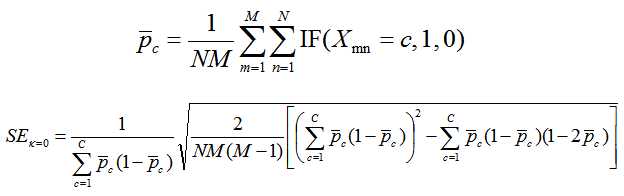

Proportion of items in which agreement occurred

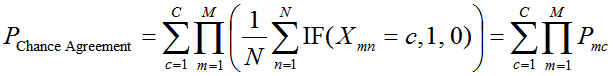

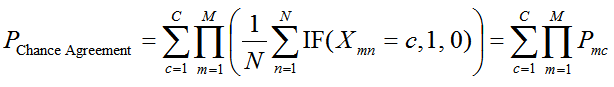

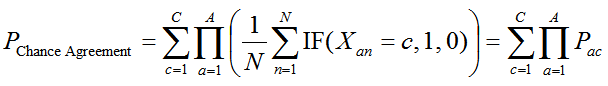

The sum of the products of each classification proportion

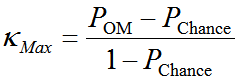

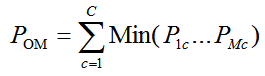
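The two proportions above can be sketched from raw ratings. This assumes one appraiser assessing each item twice, and it reads "the sum of the products of each classification proportion" as the per-category product of the first-assessment and second-assessment proportions. The function name `agreement_proportions` and the pass/fail data are hypothetical.

```python
from collections import Counter


def agreement_proportions(first, second):
    """Return (p_o, p_e) for two equal-length lists of category labels."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(first)
    # Observed agreement: share of items given the same category both times.
    p_o = sum(a == b for a, b in zip(first, second)) / n
    # Chance agreement: for each category, multiply its proportion on the
    # first assessment by its proportion on the second, then sum.
    c1, c2 = Counter(first), Counter(second)
    p_e = sum((c1[c] / n) * (c2[c] / n) for c in set(first) | set(second))
    return p_o, p_e


# Hypothetical data: ten items, each assessed twice by the same appraiser.
first = ["pass", "pass", "pass", "fail", "fail",
         "pass", "fail", "pass", "pass", "fail"]
second = ["pass", "pass", "fail", "fail", "fail",
          "pass", "pass", "pass", "pass", "fail"]

p_o, p_e = agreement_proportions(first, second)
kappa = (p_o - p_e) / (1 - p_e)
print(round(p_o, 3), round(p_e, 3), round(kappa, 3))  # 0.8 0.52 0.583
```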

The maximum value of kappa given the observed lack of symmetry

- KMax is the maximum value kappa can attain if the only disagreement is that found by the lack of symmetry

- KMax is one minus the proportional difference found off the diagonal

- KMax will equal kappa when the number above or the number below the diagonal is zero

- 1-KMax is the loss in agreement above chance due to non-symmetry

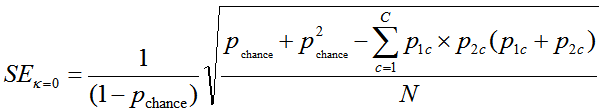

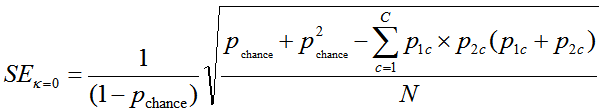
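A minimal sketch of KMax follows. It assumes Cohen's usual definition, in which the largest agreement the marginals allow is the sum over categories of the smaller of the row and column proportions. The function name `kappa_and_kmax` and both tables are hypothetical.

```python
def kappa_and_kmax(table):
    """Return (kappa, k_max) for a square table of counts."""
    n = sum(sum(row) for row in table)
    k = len(table)
    row = [sum(table[i]) / n for i in range(k)]
    col = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    p_o = sum(table[i][i] for i in range(k)) / n
    p_e = sum(r * c for r, c in zip(row, col))
    # Largest observed agreement possible with these marginals (assumption:
    # Cohen's definition, the sum of the smaller marginal per category).
    p_max = sum(min(r, c) for r, c in zip(row, col))
    kappa = (p_o - p_e) / (1 - p_e)
    k_max = (p_max - p_e) / (1 - p_e)
    return kappa, k_max


# Off-diagonal counts 10 and 5: some disagreement is not due to asymmetry.
print(kappa_and_kmax([[40, 10], [5, 45]]))
# Zero below the diagonal: all disagreement comes from the asymmetry,
# so kappa and KMax coincide.
print(kappa_and_kmax([[40, 10], [0, 50]]))
```

For the first table, kappa is about 0.7 and KMax about 0.9, so 1 - KMax (about 0.1) is the share of agreement above chance that the unequal marginals rule out.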

The standard error used to test whether kappa equals zero (no agreement beyond chance)

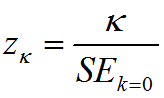

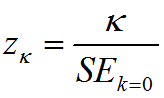

The significance of kappa is tested with a z-score

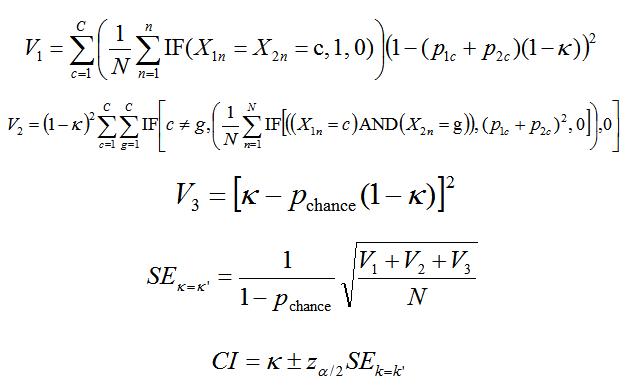
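The test above can be sketched as follows. The handout's own formulas are in the images, so this uses the large-sample standard error of Fleiss, Cohen and Everitt (1969) for a two-way table under the hypothesis kappa = 0, which may differ in form from the one shown. The function name `kappa_z_test`, the table and the one-sided p-value are assumptions made for illustration.

```python
import math


def kappa_z_test(table):
    """Return (kappa, se0, z, p_upper) for a square table of counts."""
    n = sum(sum(row) for row in table)
    k = len(table)
    row = [sum(table[i]) / n for i in range(k)]
    col = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    p_o = sum(table[i][i] for i in range(k)) / n
    p_e = sum(r * c for r, c in zip(row, col))
    kappa = (p_o - p_e) / (1 - p_e)
    # Standard error under H0: kappa = 0 (Fleiss, Cohen & Everitt, 1969).
    inner = p_e + p_e ** 2 - sum(r * c * (r + c) for r, c in zip(row, col))
    se0 = math.sqrt(inner) / ((1 - p_e) * math.sqrt(n))
    z = kappa / se0
    # Upper-tail p-value from the standard normal distribution
    # (assumption: the alternative of interest is kappa > 0).
    p_upper = 0.5 * math.erfc(z / math.sqrt(2))
    return kappa, se0, z, p_upper


kappa, se0, z, p_upper = kappa_z_test([[40, 10], [5, 45]])
print(f"kappa={kappa:.3f} se0={se0:.4f} z={z:.2f} p={p_upper:.2e}")
```

A large positive z indicates agreement significantly above chance. This standard error applies only to the test against zero, not to confidence intervals.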

kappa Confidence Interval

Calculations

— One Appraiser

— Two Categories

— Three or More Repeated Assessments

Proportion of items in which agreement occurred

The sum of the products of each classification proportion

Used to calculate KMax

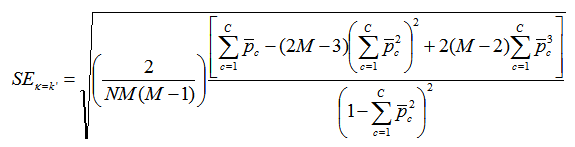

The standard error used to test whether kappa equals zero (no agreement beyond chance)

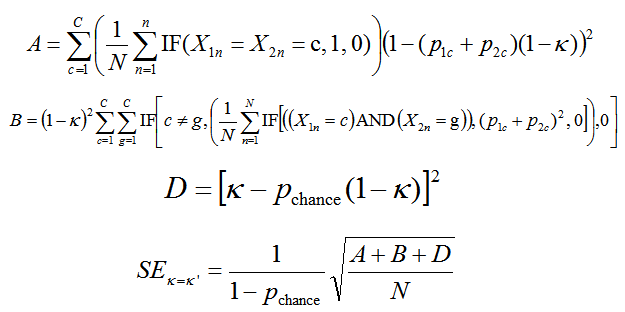

The standard error used for kappa confidence intervals

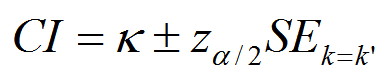

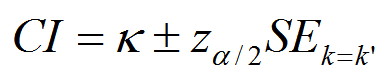

kappa confidence interval

— Two Appraisers

— Two Categories

— One Assessment Each

A = Number of Appraisers

C = Number of Categories

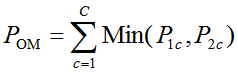

Proportion of items in which agreement occurred

The sum of the products of each classification proportion

The standard error used to test whether kappa equals zero (no agreement beyond chance)

The significance of kappa is tested with a z-score

The standard error used for kappa confidence intervals

kappa confidence interval

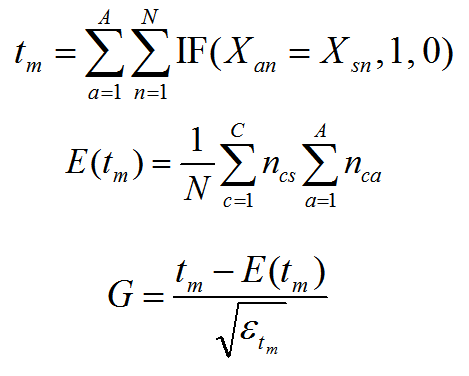
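For the case of two appraisers, two categories and one assessment each, the confidence interval can be sketched as below. It assumes Cohen's (1960) simple large-sample standard error, sqrt(Po(1 - Po) / (N(1 - Pe)^2)), which may be a simpler form than the one in the images. The function name `kappa_confidence_interval` and the table are hypothetical.

```python
import math
from statistics import NormalDist


def kappa_confidence_interval(table, confidence=0.95):
    """Return (kappa, (lower, upper)) for a square table of counts.

    Rows are Appraiser 1's categories, columns are Appraiser 2's.
    """
    n = sum(sum(row) for row in table)
    k = len(table)
    row = [sum(table[i]) / n for i in range(k)]
    col = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    p_o = sum(table[i][i] for i in range(k)) / n
    p_e = sum(r * c for r, c in zip(row, col))
    kappa = (p_o - p_e) / (1 - p_e)
    # Cohen's (1960) approximate standard error for interval estimation.
    se = math.sqrt(p_o * (1 - p_o) / (n * (1 - p_e) ** 2))
    # Two-sided critical value, about 1.96 for 95% confidence.
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)
    return kappa, (kappa - z_crit * se, kappa + z_crit * se)


kappa, (lower, upper) = kappa_confidence_interval([[40, 10], [5, 45]])
print(f"kappa={kappa:.3f}, 95% CI=({lower:.3f}, {upper:.3f})")
```

The interval is not clipped to the range -1 to +1 here; with small samples or kappa near the limits, a caller may want to truncate it.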

G Index

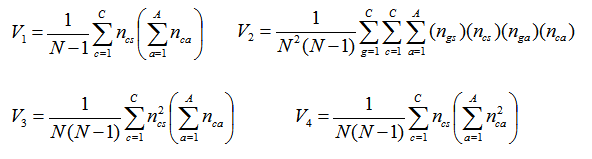

$n_{xy}$ = the count of Category $x$ for Appraiser $y$ or Standard $s$

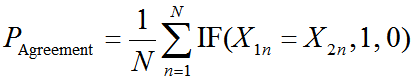

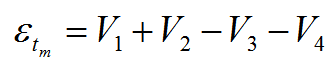

This is the statistic used to test for overall Concordance.

It is evaluated as a z test statistic.